Generative AI has business applications beyond those covered by discriminative models. Let's look at the general-purpose models available for a wide range of problems and the impressive results they achieve. Various algorithms and related models have been developed and trained to create new, realistic content from existing data. Some of these models, each with distinct mechanisms and capabilities, are at the forefront of advances in fields such as image generation, text translation, and data synthesis.

A generative adversarial network, or GAN, is a machine learning framework that pits two neural networks, a generator and a discriminator, against each other, hence the "adversarial" part. The contest between them is a zero-sum game, where one agent's gain is another agent's loss. GANs were invented by Ian Goodfellow and his colleagues at the University of Montreal in 2014.

The discriminator outputs a probability between 0 and 1. The closer the result is to 0, the more likely the sample is fake. Conversely, numbers closer to 1 indicate a higher likelihood that the sample is real. Both the generator and the discriminator are often implemented as CNNs (convolutional neural networks), especially when working with images. The adversarial nature of GANs lies in a game-theoretic scenario in which the generator network must compete against an adversary.

Its adversary, the discriminator network, tries to distinguish between samples drawn from the training data and those drawn from the generator. A GAN is considered successful when the generator creates a fake sample so convincing that it fools both the discriminator and humans.
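The zero-sum contest can be made concrete with a minimal sketch. It assumes the minimax value function from the original GAN formulation, evaluated here for a single real and a single fake sample rather than averaged over batches; the function names and the example scores are illustrative, not taken from any library.

```python
import math

def game_value(d_real, d_fake):
    """Value V = log D(x) + log(1 - D(G(z))) for one real and one fake sample.

    d_real: discriminator score for a real sample (probability it is real).
    d_fake: discriminator score for a generated sample.
    """
    return math.log(d_real) + math.log(1.0 - d_fake)

def payoffs(d_real, d_fake):
    """The discriminator tries to maximize V; the generator tries to minimize it."""
    v = game_value(d_real, d_fake)
    return {"discriminator": v, "generator": -v}

# Early in training: the fake is easy to spot (score 0.1).
early = payoffs(d_real=0.9, d_fake=0.1)
# Later: the fake is convincing (score 0.5), so the generator's payoff rises
# by exactly as much as the discriminator's payoff falls.
later = payoffs(d_real=0.9, d_fake=0.5)
```

Because the two payoffs always sum to zero, any improvement for the generator is, by construction, a loss for the discriminator.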

The training cycle then repeats.

First described in a 2017 Google paper, the transformer architecture is a machine learning framework that is highly effective for natural language processing (NLP) tasks. It learns to find patterns in sequential data such as written text or spoken language. Based on the context, the model can predict the next element of the series, for example, the next word in a sentence.
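Next-element prediction can be sketched as a loop: score the candidate words, turn the scores into probabilities, pick the most probable word, append it to the context, and repeat. The snippet below is a toy under loud assumptions: the score table `NEXT_WORD_SCORES` is invented for illustration and conditions only on the last word, whereas a real transformer computes its scores from the whole context.

```python
import math

# Hypothetical scores for the next word given only the last word (illustrative data).
NEXT_WORD_SCORES = {
    "the": {"cat": 2.0, "mat": 1.0},
    "cat": {"sat": 2.5, "the": 0.5},
    "sat": {"on": 3.0, "the": 0.2},
    "on": {"the": 2.0, "cat": 0.1},
}

def softmax(scores):
    """Convert a {word: score} mapping into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

def predict_next(context):
    """Pick the most probable next word (greedy choice)."""
    probs = softmax(NEXT_WORD_SCORES[context[-1]])
    return max(probs, key=probs.get)

def generate(prompt, steps):
    """Append the predicted word to the context and repeat."""
    context = list(prompt)
    for _ in range(steps):
        context.append(predict_next(context))
    return context

sentence = generate(["the"], steps=3)
```

Greedy selection is only one option; sampling from the probabilities instead would give more varied output.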

A vector represents the semantic features of a word, with similar words having vectors that are close in value. For example, the word crown might be represented by the vector [3, 103, 35], while apple could be [6, 7, 17], and pear might look like [6.5, 6, 18]. Of course, these vectors are just illustrative; the real ones have many more dimensions.
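To see what "close in value" means, here is a small sketch that measures the Euclidean distance between the illustrative vectors above. Euclidean distance is one assumed choice of measure; cosine similarity is another common one.

```python
import math

# The illustrative three-dimensional vectors from the text.
vectors = {
    "crown": [3, 103, 35],
    "apple": [6, 7, 17],
    "pear": [6.5, 6, 18],
}

def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

apple_pear = euclidean_distance(vectors["apple"], vectors["pear"])
apple_crown = euclidean_distance(vectors["apple"], vectors["crown"])
```

Here `apple_pear` is far smaller than `apple_crown`, matching the intuition that apple and pear are semantically closer to each other than either is to crown.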

At this stage, information about the position of each token within a sequence is added in the form of another vector, which is summed with the input embedding. The result is a vector reflecting the word's initial meaning and its position in the sentence. It's then fed to the transformer neural network, which consists of two blocks.
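The summation step can be sketched as follows. The text does not say how the position vector is built, so this example assumes the sinusoidal encoding from the original transformer paper; learned position embeddings are a common alternative. The helper names are illustrative.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal position vector: sine on even indices, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        # Each sine/cosine pair shares one frequency, set by the even index.
        angle = position / (10000 ** ((i - i % 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_position(embedding, position):
    """Sum the input embedding with the position vector, element by element."""
    pe = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

apple = [6.0, 7.0, 17.0]              # illustrative embedding from the text
apple_first = add_position(apple, 0)  # "apple" as the first token
apple_third = add_position(apple, 2)  # "apple" as the third token
```

The same word now yields a different input vector depending on where it appears, which is how the network can tell word order apart.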

Mathematically, the relations between words in a phrase look like distances and angles between vectors in a multidimensional vector space. The self-attention mechanism can detect the subtle ways in which even distant data elements in a series influence and depend on each other. In the sentences "I poured water from the pitcher into the cup until it was full" and "I poured water from the pitcher into the cup until it was empty", a self-attention mechanism can distinguish the meaning of "it": in the former case, the pronoun refers to the cup, in the latter to the pitcher.
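A minimal sketch of self-attention, assuming the scaled dot-product form and, for brevity, using the input vectors directly as queries, keys, and values. Real transformers first apply learned projection matrices, which are omitted here, and the token vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn a list of scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Each output is a weighted mix of all inputs; weights come from dot products."""
    scale = math.sqrt(len(vectors[0]))
    outputs, all_weights = [], []
    for query in vectors:
        weights = softmax([dot(query, key) / scale for key in vectors])
        mixed = [
            sum(w * value[d] for w, value in zip(weights, vectors))
            for d in range(len(query))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors: the first two point in similar directions.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
```

In `weights[0]`, the first token gives more weight to the similar second token than to the dissimilar third one, regardless of how far apart they sit in the sequence.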

A softmax function is used at the end to compute the probability of the different outputs and choose the most probable option. The generated output is then appended to the input, and the whole process repeats.

The diffusion model is a generative model that creates new data, such as images or sounds, by mimicking the data on which it was trained.

Think of the diffusion model as an artist-restorer who studied paintings by old masters and can now paint canvases in the same style. The diffusion model does roughly the same thing in three main stages. Direct diffusion gradually introduces noise into the original image until the result is just a chaotic set of pixels.
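The noising stage can be sketched in a few lines. This assumes the Gaussian step commonly used in denoising diffusion models, where each step slightly shrinks the current values and adds a small amount of random noise; the linear noise schedule, the step count, and the four-pixel "image" are illustrative assumptions.

```python
import math
import random

def linear_betas(steps, start=1e-4, end=0.02):
    """Noise level per step, rising linearly (an assumed schedule)."""
    return [start + (end - start) * t / (steps - 1) for t in range(steps)]

def forward_diffusion(pixels, betas, rng):
    """Apply every noising step and return the sample after each one."""
    x = list(pixels)
    trajectory = []
    for beta in betas:
        # Shrink the current values slightly and add Gaussian noise.
        x = [
            math.sqrt(1.0 - beta) * v + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for v in x
        ]
        trajectory.append(x)
    return trajectory

def remaining_signal(betas):
    """Factor by which the original pixel values have been scaled down."""
    return math.sqrt(math.prod(1.0 - b for b in betas))

rng = random.Random(0)         # fixed seed so the run is repeatable
image = [0.2, 0.8, 0.5, 0.9]   # a toy four-pixel "image"
betas = linear_betas(1000)
noisy = forward_diffusion(image, betas, rng)[-1]
```

After only a few steps almost all of the original signal remains; after the full schedule `remaining_signal(betas)` is close to zero, so the final sample is essentially pure noise.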

If we go back to our analogy of the artist-restorer, direct diffusion is handled by time, which covers the painting with a network of cracks, dust, and grease; sometimes the painting is reworked, adding certain details and removing others. Learning is like studying a painting to grasp the old master's original intent. The model carefully analyzes how the added noise alters the data.

This understanding allows the model to effectively reverse the process later on. After learning, the model can reconstruct the distorted data via the process called reverse diffusion. It starts from a noise sample and removes the blur step by step, the same way our artist gets rid of contaminants and later layers of paint.

Latent representations contain the fundamental elements of data, allowing the model to regenerate the original information from this encoded essence. If you change a DNA molecule just a little bit, you get a completely different organism.

Say, the woman in the second picture at the top right looks a bit like Beyoncé but, at the same time, we can see that it's not the pop singer. In image-to-image translation, as the name suggests, generative AI transforms one type of image into another. There are several variations of image-to-image translation. One of them, style transfer, involves extracting the style from a famous painting and applying it to another image.

The results of all these programs are pretty similar. However, some users note that, on average, Midjourney draws a bit more expressively, while Stable Diffusion follows the request more closely at default settings. Researchers have also used GANs to produce synthesized speech from text input.

The main task is to perform audio analysis and create "dynamic" soundtracks that can change depending on how users interact with them. For example, the music may change according to the atmosphere of a game scene or the intensity of the user's workout in the gym.

Logically, videos can also be generated and converted in much the same way as images. While 2023 was marked by breakthroughs in LLMs and a boom in image generation technologies, 2024 has seen significant advances in video generation. At the beginning of 2024, OpenAI introduced a really impressive text-to-video model called Sora. Sora is a diffusion-based model that generates video from static noise.

NVIDIA's Interactive AI Rendered Virtual World

Such synthetically created data can help develop self-driving cars, which can use generated virtual-world training datasets for pedestrian detection. Of course, generative AI is no exception.

Since generative AI can self-learn, its behavior is difficult to control. The outputs it provides can often be far from what you expect.

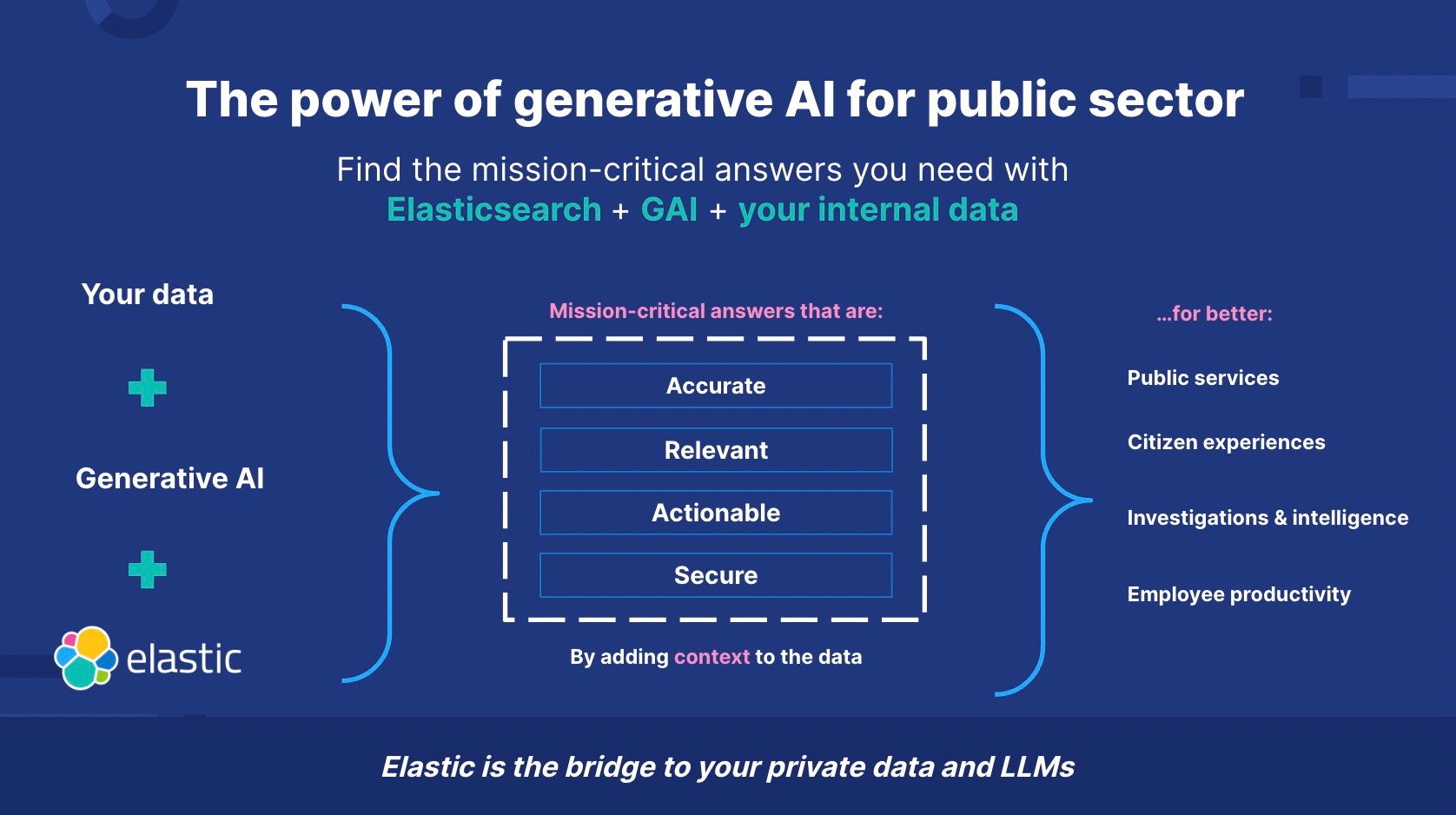

That's why so many are implementing dynamic and intelligent conversational AI models that customers can interact with through text or speech. GenAI powers chatbots by understanding and generating human-like text responses. In addition to customer service, AI chatbots can supplement marketing efforts and support internal communications. They can also be integrated into websites, messaging apps, or voice assistants.
